What is Bayesian Reasoning?

Bayesian reasoning is a statistical technique for working out how likely something is based on two things: what you already know and new information you’ve just learned.

For example, let’s say there’s a rare disease that only affects 1 in 1,000 people. If someone takes a test for the disease and it comes back positive, does that mean they definitely have it? Not necessarily. You have to think about:

• How rare the disease is (what you already know).

• How accurate the test is (how far to trust the new information, the positive result).

By combining these, you can work out how likely it is that the positive result actually means the person has the disease. This is what Bayesian reasoning does: it combines existing knowledge with new evidence to update your understanding.

Why It’s Important in Real Life

Bayesian reasoning is important in situations like medical testing, where making the right decision can have serious consequences.

• It avoids jumping to conclusions: A positive test result doesn’t always mean the person has the disease, especially if the disease is rare or the test makes mistakes. Bayesian reasoning helps doctors think carefully about these situations.

• It combines evidence and context: Doctors can use test results (evidence) along with how common the disease is (context) to decide how worried they should be.

• It adapts with more information: If another test result comes in or new symptoms appear, Bayesian reasoning helps refine the diagnosis.
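To make the "adapts with more information" point concrete, here is a minimal Python sketch that applies Bayes' rule twice, borrowing the rare-disease numbers from the worked example later in this article (a 1 in 1,000 prior, 99% of cases found, 10% false alarms). It assumes, as a simplification not stated in the text, that a second test result is independent of the first once the person's true disease status is known; the function name `update` is hypothetical.

```python
def update(prior: float, sensitivity: float, false_positive_rate: float) -> float:
    """Return P(disease | positive result), given P(disease) before the test."""
    # Chance of being sick AND testing positive, and of being healthy AND testing positive.
    joint_sick = sensitivity * prior
    joint_healthy = false_positive_rate * (1.0 - prior)
    # Share of all positive results that come from sick people.
    return joint_sick / (joint_sick + joint_healthy)


prior = 1 / 1000                        # how common the disease is before any testing
after_one = update(prior, 0.99, 0.10)   # after one positive test
# Assumption: the second test is independent of the first given disease status,
# so the first posterior simply becomes the prior for the second test.
after_two = update(after_one, 0.99, 0.10)

print(f"after one positive test:  {after_one:.4f}")
print(f"after two positive tests: {after_two:.4f}")
```

Under these assumptions the estimate climbs from about 1% after one positive result to about 9% after two, which is the kind of refinement the bullet describes.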

Caveats

Bayesian reasoning can be flawed if its inputs, such as the estimated disease prevalence (prior knowledge) or the test’s accuracy, are wrong or uncertain. If the starting assumptions are off, the conclusions will be too. The evidence must also genuinely relate to the hypothesis being tested. That is essential, but whether a given piece of evidence is relevant is not always obvious.

It’s also hard to apply when there’s not enough reliable data or when the situation is too complex to confidently estimate probabilities. In these cases, the results might seem precise but could actually be misleading.
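The caveat about wrong inputs can be checked numerically. This sketch assumes the same test characteristics used later in the article (99% of cases found, 10% false alarms) and recomputes the posterior for several prior estimates; the alternative priors are hypothetical, chosen only to show the effect of misjudging how common the disease is.

```python
def posterior(prior: float, sensitivity: float = 0.99, false_positive_rate: float = 0.10) -> float:
    """P(disease | positive test) by Bayes' theorem."""
    evidence = sensitivity * prior + false_positive_rate * (1.0 - prior)
    return sensitivity * prior / evidence


# Hypothetical prior estimates: ten times too low, the article's value, ten times too high.
for prior in (0.0001, 0.001, 0.01):
    print(f"prior {prior:.4f} -> posterior {posterior(prior):.4f}")
```

In this range a tenfold error in the prior shifts the posterior by close to a factor of ten (from about 0.1% to about 9%), which is the caveat above expressed in numbers.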

Working Through the Logic

We're going to look at Bayesian statistical reasoning through the lens of a comedic disease, the Lurgy (spelled "Lurgi" in the show), as featured by the Goons on the UK radio program The Goon Show in the middle of the last century. We will base this discussion on a list of symptoms as given in Spike Milligan's books.

As the Lurgi is a creation of satire, there are no real-world statistics on its incidence. However, for the purpose of a hypothetical analysis set on April 1, 1950, we can whimsically assign it an incidence in the population, even though the show portrays it spreading rapidly.

Lurgi, a fictitious disease popularized by Spike Milligan and the Goons in the 1950s, was presented in The Goon Show episode "Lurgi Strikes Britain," broadcast on November 9, 1954, in which the disease was humorously depicted with symptoms such as an uncontrollable urge to exclaim "Eeeeyack-a-boo."

As far as I can remember, Goon Show cast member Spike Milligan humorously described the Lurgi as having symptoms such as:

• An irresistible compulsion to play the trombone.

• An uncontrollable urge to exclaim "Eeeeyack-a-boo."

• Rolling up one's trouser cuffs to an unusual length.

These whimsical symptoms align with the comedic and absurd style characteristic of Milligan's work. However, without direct excerpts from the book, I cannot confirm these details with certainty.

We Present a Story

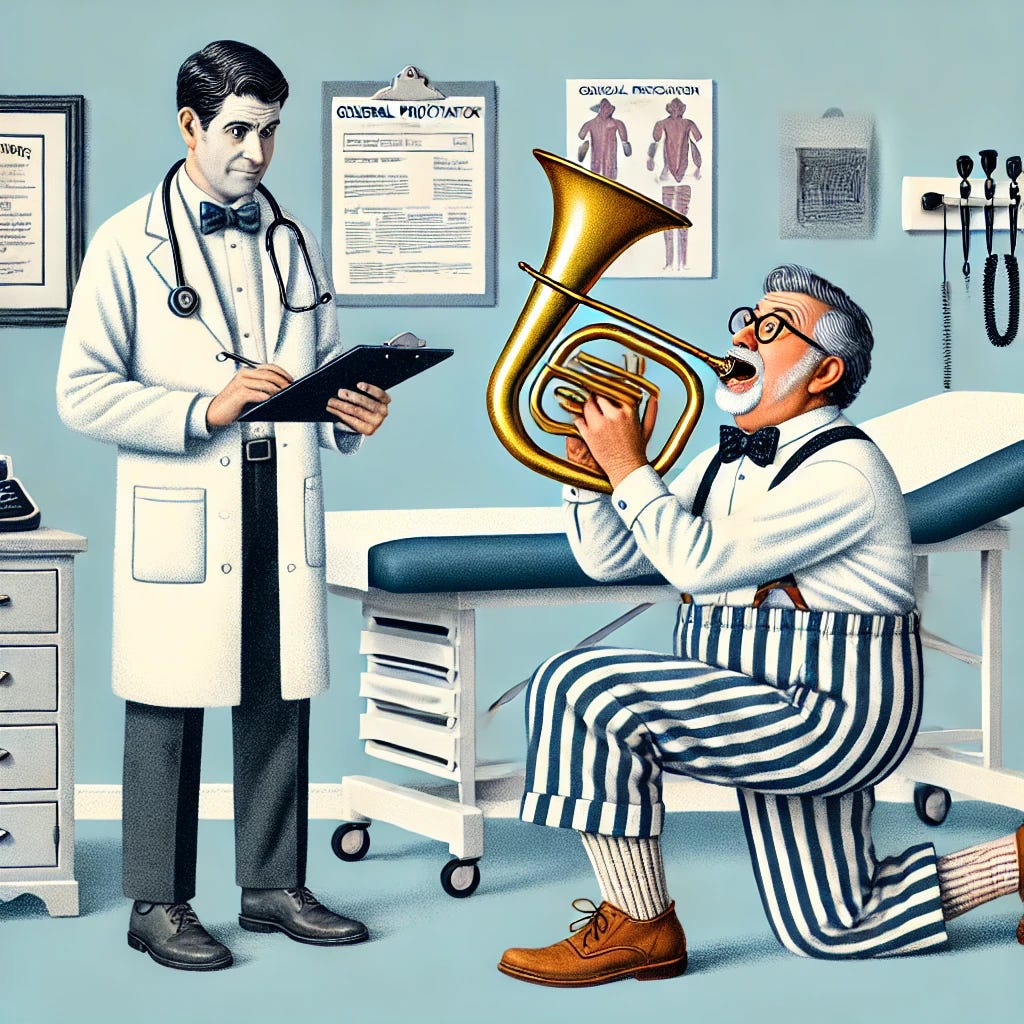

So here's the fictitious story, an illustration built on the comedic Lurgy. On April 1st, 1950, a man presented himself at his physician's office, claiming he had the Lurgy. The incidence of Lurgy in his district at that time was one in a thousand, so it was a rare disease. The doctor was curious about the claim, and he had diagnostic tools at his disposal. He hoped there wasn't an outbreak going on, but in any case the incidence had been stable for years and the baseline had changed very little. The doctor had one solid diagnostic test. He knew that if a patient had Lurgy and was shown a picture of a trombone, they would drop to their knees, walk around on their knees looking for a trombone, and shout "Eeeeyack-a-boo" at the top of their lungs for about five minutes.

Let’s assume a hypothetical incidence of the disease of 1 in 1,000 on April 1, 1950. This is our starting estimate, called the “Prior” in statistical jargon.

We want to find out how likely it is that the individual has the disease, given both the incidence and the new information, or evidence, provided by the diagnostic test. The conclusion, calculated as a probability, is called the “Posterior.”

This diagnostic test had about a 99% chance of finding the condition: when a person had the disease, the test found it 99% of the time and missed it the remaining 1% of the time. The first figure is called the “true positive rate” or, more technically, the sensitivity of the diagnostic test. The second is called the “false negative rate” and is the complement of the true positive rate (1 minus it): for someone who has the disease, the test either finds it or misses it, so the two probabilities must sum to 100%.

Sometimes the test would over-diagnose, raising a false alarm. It did so 10% of the time, mistakenly claiming that people had the disease when they did not. This is called the “false positive rate.” Its complement, 90%, is the “true negative rate,” also called the specificity: the rate at which the test correctly finds no disease in people who don't have it. Specificity therefore measures the test's ability to discriminate the healthy from the sick.

Here is a recap of the rates for true positives, true negatives, false positives, and false negatives:

• True Positive Rate (Sensitivity): 99%

• True Negative Rate (Specificity): 90%

• False Positive Rate: 10%

• False Negative Rate: 1%

There are two complementary pairs of rates, which always sum to 100% because they describe opposite outcomes:

True Positive Rate (Sensitivity) and False Negative Rate:

◦ Sensitivity (True Positive Rate): The percentage of actual cases correctly identified.

◦ False Negative Rate: The percentage of actual cases missed by the test.

◦ Complement: True Positive Rate + False Negative Rate = 100%.

True Negative Rate (Specificity) and False Positive Rate:

◦ Specificity (True Negative Rate): The percentage of non-cases correctly identified.

◦ False Positive Rate: The percentage of non-cases incorrectly identified as positive.

◦ Complement: True Negative Rate + False Positive Rate = 100%.

These pairs reflect how the test distinguishes true conditions (positive or negative) from errors.
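The pairing above means two numbers fully describe this test. As a small illustrative sketch (variable names are my own), the other two rates follow as complements:

```python
# Two numbers fully describe this test; the other two rates are complements.
sensitivity = 0.99   # found cases: P(positive | disease)
specificity = 0.90   # properly cleared: P(negative | no disease)

false_negative_rate = 1 - sensitivity   # missed cases: P(negative | disease)
false_positive_rate = 1 - specificity   # false alarms: P(positive | no disease)

rates = {
    "true positive rate (sensitivity)": sensitivity,
    "false negative rate": false_negative_rate,
    "true negative rate (specificity)": specificity,
    "false positive rate": false_positive_rate,
}
for name, value in rates.items():
    print(f"{name}: {value:.0%}")

# Each complementary pair covers both outcomes for one group, so it sums to 1.
assert abs(sensitivity + false_negative_rate - 1) < 1e-12
assert abs(specificity + false_positive_rate - 1) < 1e-12
```

Note that the 1 - x subtractions leave tiny floating-point residue, which is why the checks compare against a tolerance rather than testing exact equality.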

I have trouble thinking with the terms "true positive," "false positive," "true negative," and "false negative," because they don't connect to any imagery or everyday experience for me. I find it much easier to say that you've found some cases, missed some cases, wrongly diagnosed some cases as existing when they don't, and correctly cleared some people who don't have the disease. The whole language of "true" and "false" and "positive" and "negative" can be hard to fathom, and plain language like "missed cases" or "found cases" makes these concepts clearer and more intuitive.

Here’s a simple explanation of the four possible outcomes for a medical test. This plain language avoids the confusion of "true/false" and "positive/negative" terminology:

Found Cases (99%): If someone truly has the disease, the test correctly identifies them 99% of the time.

Missed Cases (1%): If someone truly has the disease, the test fails to identify them 1% of the time.

Misdiagnosed Cases (as existing) (10%): If someone does not have the disease, the test mistakenly says they do 10% of the time.

Properly Diagnosed Cases (as not having it) (90%): If someone does not have the disease, the test correctly identifies them as healthy 90% of the time.

These percentages represent how well the test performs in different situations.
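To see what these four percentages mean in people rather than rates, here is a sketch that applies them to a hypothetical group of 10,000 people with the 1-in-1,000 incidence used throughout this article. The group size is an arbitrary illustrative choice, and fractional people are kept rather than rounded.

```python
population = 10_000
incidence = 1 / 1000

sick = population * incidence          # 10 people have the disease
healthy = population - sick            # 9,990 people do not

found = 0.99 * sick                    # sick, test says sick
missed = 0.01 * sick                   # sick, test says healthy
misdiagnosed = 0.10 * healthy          # healthy, test says sick (false alarm)
properly_cleared = 0.90 * healthy      # healthy, test says healthy

for label, count in [("found", found), ("missed", missed),
                     ("misdiagnosed", misdiagnosed), ("properly cleared", properly_cleared)]:
    print(f"{label:>17}: {count:,.1f}")
```

The false alarms (about 999) outnumber the found cases (about 10) by roughly a hundred to one, which is the imbalance the rest of the article turns on.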

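The incidence probability means how common the disease is in the population. In this case, the disease affects 1 out of every 1,000 people (0.1%). This means that, on average, if you randomly pick 1,000 people, only one of them is expected to have the disease. It tells the doctor how rare or frequent the disease is. (Strictly speaking, epidemiologists call the share of people who currently have a disease its prevalence and reserve "incidence" for the rate of new cases over a period; here "incidence" is used in the looser, everyday sense.)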

We give a date because the incidence applies to a particular time period. On April 1, 1950, the incidence was 1 in 1,000 people (0.1%): in the doctor's district, for every 1,000 people in the population, only one person was expected to have the disease. The doctor also knew that this incidence had remained stable over many years, so there was no evidence of an outbreak or unusual increase in cases at that time. This helps frame how rare the disease was during that period.

We review the symptoms that we've hypothesized for Lurgy:

1. Urge to play the trombone

2. Dropping to knees and shouting "Eeeeyack-a-boo" repeatedly

3. Rolling up trouser cuffs to an unusual length

The diagnostic test we're planning to use is described by two technical terms, sensitivity and specificity, but both can be explained more clearly in plain English.

The diagnostic test works by showing a picture of a trombone to the person. If they have the disease, they will drop to their knees, walk around on their knees, and shout "Eeeeyack-a-boo" for five minutes.

• How well it finds people with the disease: The test is very good at identifying those who actually have the disease, correctly finding them 99% of the time.

• How often it mistakes healthy people for having the disease: The test occasionally gets it wrong and says someone has the disease when they don’t, which happens 10% of the time.
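As a sanity check that does not rely on any formula, here is a hypothetical Monte Carlo sketch: it simulates showing the trombone picture to many people, using the 1-in-1,000 incidence and the 99% and 10% figures above, and counts how many of those who react actually have Lurgy. The function name, seed, and population size are illustrative choices, not part of the story.

```python
import random


def simulate(n_people: int, incidence: float, sensitivity: float,
             false_positive_rate: float, seed: int = 1950) -> float:
    """Estimate P(disease | positive) by simulating the trombone test on n_people."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    positives = 0
    sick_and_positive = 0
    for _ in range(n_people):
        has_lurgy = rng.random() < incidence
        # A sick person reacts with probability `sensitivity`;
        # a healthy person reacts (a false alarm) with probability `false_positive_rate`.
        reacts = rng.random() < (sensitivity if has_lurgy else false_positive_rate)
        if reacts:
            positives += 1
            if has_lurgy:
                sick_and_positive += 1
    return sick_and_positive / positives


estimate = simulate(500_000, incidence=0.001, sensitivity=0.99, false_positive_rate=0.10)
print(f"simulated share of positives who have Lurgy: {estimate:.4f}")
```

The estimate lands close to 1%, because among all the people who drop to their knees, the healthy false alarms vastly outnumber the genuine cases.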

We combine the sensitivity with the incidence to arrive at the joint probability.

To combine the test's accuracy (how well it finds cases) with how rare the disease is (the incidence), we think about how often the disease actually shows up in the population and how good the test is at catching it.

Start with the incidence: Only 1 in 1,000 people actually has the disease (0.1%).

Then, consider how well the test finds those people: The test correctly finds 99% of the people who have the disease.

To figure out the chance of both having the disease and being identified correctly (the joint probability), multiply the two percentages:

◦ 0.1% (1 in 1,000) × 99% (test accuracy for finding cases) = 0.099%.

This means about 0.099% of the total population is both sick and identified correctly by the test.
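The multiplication above is short enough to write out directly. A minimal sketch, with variable names of my own choosing:

```python
incidence = 1 / 1000   # P(disease), the prior
sensitivity = 0.99     # P(positive | disease)

# Joint probability: P(disease AND positive) = P(positive | disease) * P(disease)
joint = sensitivity * incidence
print(f"joint probability: {joint:.5f} ({joint:.3%} of the population)")
```

This is a share of the whole population, not yet the chance that a person who tested positive is sick.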

However, the joint probability on its own is not considered sufficient. Instead, it is divided by the overall probability of a positive test, a figure that combines the sensitivity, the false positive rate (1 minus the specificity), and the incidence. Why is that done? What's the rationale for it?

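We divide the joint probability by the overall probability of a positive result, which adds together how often sick people test positive (the sensitivity) and how often healthy people test positive by mistake (the false positive rate, 1 minus the specificity), each weighted by how common that group is. This adjusts for the fact that the test doesn’t just look at sick people; it looks at everyone, sick and healthy.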

By dividing, we account for how often the test gives a positive result, right or wrong, across the whole population. This step ensures that we don't overestimate the likelihood of someone having the disease just because the test says so, especially when the disease is rare. It essentially "scales" the joint probability into the share of all positive results that come from people who are actually sick.
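The division described above can be written out step by step. This sketch (plain Python; the variable names are my own) builds the denominator from the two ways a positive result can arise and then divides the joint probability by it. Note that the denominator uses the false positive rate, 1 minus the specificity, rather than the specificity itself.

```python
incidence = 0.001            # P(disease)
sensitivity = 0.99           # P(positive | disease)
false_positive_rate = 0.10   # P(positive | no disease) = 1 - specificity

joint = sensitivity * incidence                        # sick AND positive
false_alarms = false_positive_rate * (1 - incidence)   # healthy AND positive
p_positive = joint + false_alarms                      # all positives: the "evidence"

posterior = joint / p_positive                         # P(disease | positive)
print(f"P(positive) = {p_positive:.5f}")
print(f"P(disease | positive) = {posterior:.4f}")
```

The result is just under 1%: roughly one positive result in a hundred comes from someone who actually has the disease.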

Let me clarify with examples why dividing by the total probability of a positive result is necessary and how it works. This helps us interpret the test’s results correctly, especially for rare diseases.

Without Scaling (What Happens)

If you use only the joint probability (the chance of having the disease and testing positive), you ignore the mistakes the test makes on healthy people. For example:

• Rare Disease: Only 1 in 1,000 people has the disease.

• False Positives: 10% of healthy people wrongly test positive.

If you ignore the false positives and treat the test’s 99% success rate at finding cases as the chance that a positive result is real, you’ll drastically overestimate how often a positive test result means someone actually has the disease. Why? Because when the disease is rare, far more healthy people will falsely test positive than sick people will truly test positive, even with a good test.

How Scaling Fixes This

When we divide by the overall probability of a positive result (which combines the sensitivity and the false positive rate, each weighted by how common the sick and healthy groups are), we account for every way a positive result can arise across the whole population. This ensures that we’re not just looking at how many sick people tested positive, but rather at:

• How likely it is that a positive test actually means the person is sick (the posterior probability).

Example

Imagine 10,000 people are tested:

Disease Incidence: 1 in 1,000 → 10 people have the disease.

Sensitivity: 99% → 9.9 (round to 10) are correctly identified.

False Positives: 10% of 9,990 healthy people → 999 test positive incorrectly.

Without scaling, you’d only look at the joint probability (about 10 real cases), ignoring the 999 false positives. Scaling instead compares the true positives with everyone who tested positive: about 10 true positives out of roughly 1,009 positive results in total, or about 1%. This adjustment shows that the real chance of being sick after a positive result is far lower than the test’s 99% sensitivity might suggest.
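The 10,000-person example can be turned into a short calculation. This sketch keeps the 9.9 true positives unrounded instead of rounding to 10, which is why its answer matches the formula-based result exactly; the population of 10,000 is the same illustrative figure used above.

```python
population = 10_000
sick = population // 1000               # 10 people with the disease
healthy = population - sick             # 9,990 people without it

true_positives = 0.99 * sick            # 9.9, kept unrounded
false_positives = 0.10 * healthy        # about 999
all_positives = true_positives + false_positives

share_sick = true_positives / all_positives
print(f"{true_positives:.1f} true positives out of {all_positives:,.1f} positives")
print(f"chance a positive result is a real case: {share_sick:.2%}")
```

Counting people instead of multiplying probabilities is often the easiest way to convince yourself the division is right: the denominator is simply everyone who tested positive.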

Why Scaling Works

Scaling ensures that probabilities account for the test’s tendency to make mistakes (false positives and false negatives). Without it, the test’s results would mislead you, especially in cases where the disease is rare or the test has a high false positive rate.

Appendix A - A Slightly More Technical Explanation

1. Introduction to Bayesian Reasoning and Its Components

Bayesian reasoning combines prior knowledge with new evidence to refine our understanding of probabilities. The process involves three key elements:

Prior Probability (P(Disease)): This represents the initial belief about how likely the disease is before considering any test results. In the example, the prior is the incidence of the disease, 1 in 1,000 (0.1%).

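Likelihood (P(Positive Test | Disease)): This is the probability of getting a positive test result if the person truly has the disease, which is simply the test’s sensitivity. Its counterpart, P(Positive Test | No Disease), is the probability of a positive result in someone healthy: the false positive rate, or 1 minus the specificity. The two test characteristics are: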

Sensitivity measures how well the test identifies true cases (99% in this example).

Specificity measures how well the test avoids false positives (90%).

Posterior Probability (P(Disease | Positive Test)): This is the updated probability that someone has the disease after testing positive. It multiplies the prior probability by the likelihood and divides the result by the evidence, P(Positive Test).

2. Why Scaling is Necessary

When calculating the posterior probability, the scaling step adjusts for how common positive test results are in the entire population. Without this adjustment, the rarity of the disease and the occurrence of false positives could distort the results.

Key Idea: Scaling ensures the probability reflects real-world conditions, accounting for both true and false positives.

3. Step-by-Step Example with Bayesian Calculations

Imagine testing 10,000 people for a rare disease (incidence = 1 in 1,000, sensitivity = 99%, specificity = 90%):

Prior Probability (Disease Prevalence):

Only 1 in 1,000 people has the disease → 10 people in the population of 10,000 are sick.

Likelihood (Test Performance):

Sensitivity (True Positive Rate): The test correctly identifies 99% of the 10 sick people → $0.99 \times 10 = 9.9$ (rounded to 10 true positives).

Specificity (True Negative Rate): The test correctly identifies 90% of healthy individuals → $0.90 \times 9{,}990 = 8{,}991$ (true negatives).

False Positives: The remaining 10% of healthy individuals test positive → $0.10 \times 9{,}990 = 999$.

Evidence (P(Positive Test)):

This includes all positive test results, both true and false:

$$P(\text{Positive Test}) = P(\text{Positive Test} \mid \text{Disease}) \cdot P(\text{Disease}) + P(\text{Positive Test} \mid \text{No Disease}) \cdot P(\text{No Disease})$$

$P(\text{Positive Test} \mid \text{Disease}) = 0.99$, $P(\text{Disease}) = 0.001$

$P(\text{Positive Test} \mid \text{No Disease}) = 0.10$, $P(\text{No Disease}) = 0.999$

$$P(\text{Positive Test}) = (0.99 \times 0.001) + (0.10 \times 0.999) = 0.00099 + 0.0999 = 0.10089$$

Posterior Probability:

Using Bayes’ theorem:

$$P(\text{Disease} \mid \text{Positive Test}) = \frac{P(\text{Positive Test} \mid \text{Disease}) \cdot P(\text{Disease})}{P(\text{Positive Test})}$$

Substituting the values:

$$P(\text{Disease} \mid \text{Positive Test}) = \frac{0.99 \times 0.001}{0.10089} \approx 0.0098 \text{ (or 0.98\%)}$$

This means that even with a positive test result, the chance of actually having the disease is only 0.98% due to the rarity of the disease and the occurrence of false positives.

4. Why Dividing by Evidence is Crucial

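Without scaling by $P(\text{Positive Test})$, the joint probability $P(\text{Positive Test} \mid \text{Disease}) \cdot P(\text{Disease})$ only tells us what share of the whole population is both sick and positive. It is not the chance that someone who has tested positive is sick, because it ignores the false positives from the much larger healthy group. Dividing by the evidence restricts attention to the people who actually tested positive, which is the group the patient belongs to, and so gives a realistic answer.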
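One way to see why the division is needed is to derive Bayes’ theorem from the definition of conditional probability. The short derivation below uses only standard identities, with $D$ for "Disease" and $+$ for "Positive Test" as shorthand introduced here, and plugs in the numbers from this appendix.

$$
\begin{aligned}
P(D \mid +) &= \frac{P(D \cap +)}{P(+)} && \text{definition of conditional probability} \\
P(D \cap +) &= P(+ \mid D)\,P(D) && \text{the joint probability} \\
\Rightarrow\quad P(D \mid +) &= \frac{P(+ \mid D)\,P(D)}{P(+)} = \frac{0.99 \times 0.001}{0.10089} \approx 0.0098
\end{aligned}
$$

The first line says that, among everyone who tested positive, we want the fraction who are also sick; the denominator $P(+)$ is exactly the pool of all positive results, true and false.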

5. Intuitive Explanation for False Positives and Scaling

When a disease is rare:

The vast majority of people do not have the disease.

Even a modest false positive rate (10% here) can produce many more false positives than true positives, simply because there are far more healthy people.

Scaling adjusts for this imbalance, ensuring the posterior probability accurately reflects the likelihood of being sick after a positive result.
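This imbalance can be made visible with a small sweep. The sketch holds the prior at 1 in 1,000 and the sensitivity at 99%, then shrinks the false positive rate; the 1% and 0.1% rates are hypothetical alternatives, not properties of the trombone test.

```python
def posterior(prior: float, sensitivity: float, false_positive_rate: float) -> float:
    """P(disease | positive) via Bayes' theorem."""
    true_alarms = sensitivity * prior
    false_alarms = false_positive_rate * (1 - prior)
    return true_alarms / (true_alarms + false_alarms)


# Keep the 1-in-1,000 prior and 99% sensitivity; shrink the false positive rate.
for fpr in (0.10, 0.01, 0.001):
    print(f"false positive rate {fpr:>5.1%}: posterior {posterior(0.001, 0.99, fpr):.1%}")
```

Only when false alarms become about as rare as the disease itself does a positive result approach even odds of being a real case.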

6. Simplifying Sensitivity and Specificity

Plain language explanations can help clarify these concepts:

Sensitivity: How good the test is at catching people who are sick. Think of it as the test’s ability to "find cases."

Specificity: How good the test is at avoiding false alarms. Think of it as the test’s ability to "avoid mistakes."

Taken together, these two numbers describe how the test behaves in both sick and healthy people, which is what Bayesian reasoning needs in order to interpret a result.

7. Conclusion

Scaling by the total probability of a positive test ensures that the test’s outcomes are evaluated realistically, considering both true positives and false positives. Bayesian reasoning accounts for the rarity of the disease and the accuracy of the test, providing a refined and actionable understanding of the results. The step-by-step process and logical scaling adjustment make the reasoning robust and conceptually sound.