Reason: Proof, Probability, and the World — On Cognitive Grounding, Irrelevance, and the Limits of Replication

Discussion of Mathematics in Open, Unstable Domains

Author’s Preface

This essay consolidates and systematizes a set of claims developed over many discussions. The aim is to distinguish mathematical results proved within formal systems from claims about the real world, to explain why the bridges between the two rest on human judgment rather than logical necessity, and to show why replication and “applicability” are constrained or incoherent in open, unstable systems. The treatment is deliberately plain-spoken. No step relies on Platonism. The ground is cognitive, linguistic, and empirical.

Introduction

Mathematical theorems connect assumptions to conclusions inside formal systems. That is all they do. Claims that a theorem’s conclusions hold in the world are extra-theoretical imports that depend on empirical warrant and on whether the theorem’s assumptions capture what actually matters in the domain. In practice, two widespread meta-assumptions are often smuggled in without scrutiny:

1. If the assumptions hold in reality, the theorem’s conclusions hold in reality.

2. If the assumptions do not hold in reality, the theorem’s conclusions do not hold in reality.

Both are conjectures. Neither is a logical consequence of the theorem. They hinge on relevance—on whether the assumptions track the operative features of the world.

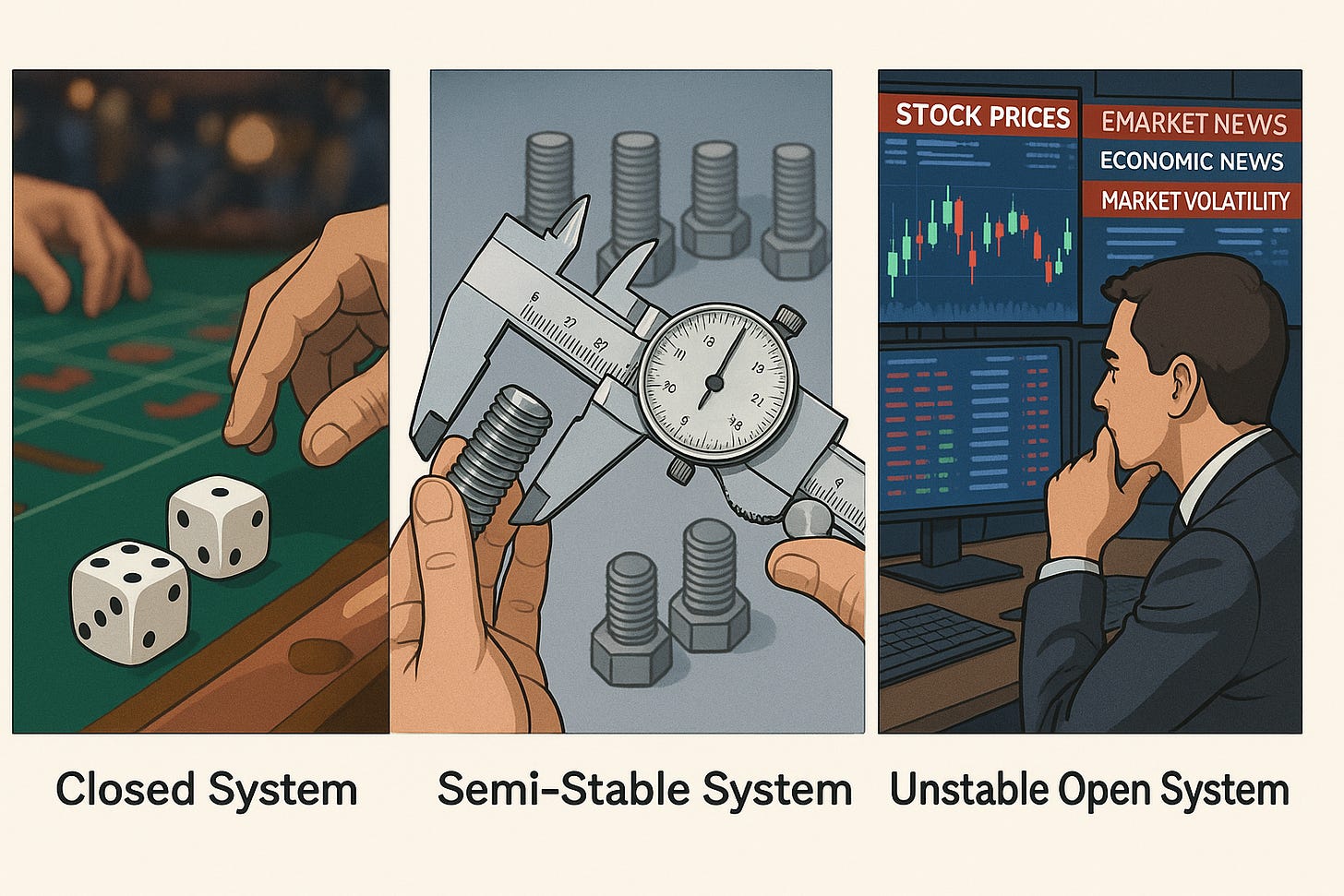

A second, deeper point concerns replication. In closed systems (dice, cards, simple physical randomizers), replication is coherent and enables direct checks of model–world fit. In open, unstable systems (medicine, macroeconomics, social behavior), strict replication is impossible in principle; only re-performances under changed conditions are feasible. That difference undermines the idea that the applicability of theorems such as the Central Limit Theorem (CLT) can be empirically “proved” in those domains.

A third point concerns proof itself. Absent Platonism, proof is a human practice: structured argument in a specialized dialect of natural language, presented to and ratified by a community. Its authority is cognitive and social, not metaphysical. The same holds for the step from mathematics to the world: only empirical performance can authorize applicability, and only within limits set by system stability.

Discussion

1) Core Principles

1.1 Principle of Cognitive Grounding of Proof

Statement. Without appeal to a non-physical realm, a proof is a structured act of human argumentation conducted in language (natural and symbolic). It exists only insofar as it is produced, understood, and accepted by a community of human thinkers.

Implications.

Symbols, axioms, and rules are human inventions.

Meaning is fixed by practice and pedagogy, not intrinsic to marks.

Mechanical verification does not escape interpretation: humans choose the system, encode the claim, and interpret the output.

Proof authority is contingent and revisable; it rests on understanding and sustained communal assent.

1.2 Principle of Theorem–World Irrelevance

Statement.

A theorem binds assumptions to conclusions only within its formal system. Transporting those conclusions to the world requires a relevance claim: that the assumptions articulate the features that actually control outcomes for the task at hand.

Two meta-assumptions (both conjectural).

Positive: “Assumptions true ⇒ conclusions true in the world.”

Negative: “Assumptions false ⇒ conclusions false in the world.”

Either may fail.

A method can succeed despite violated assumptions (because the violations are irrelevant to the objective) or fail despite satisfied assumptions (because the assumptions miss what matters).

1.3 Principle of Impossibility of Replication in Open Systems

Statement. In open, unstable systems—where conditions drift, units are non-exchangeable, and interventions feed back—strict replication is impossible in principle. Only re-performances under shifted regimes are feasible, so the textbook role of replication as a decisive test collapses.

Consequences.

Applicability of a theorem cannot be verified as in closed systems.

“Replication failure” may reflect system change rather than method misuse.

Even perfect formal fit, if achievable, would not ensure relevance.

1.4 Principle of Non-Provability of CLT Applicability in Unstable Systems

Statement.

The CLT is a mathematical result. In unstable open systems, there is no principled path to proving that aggregated variables behave as the CLT predicts. At best, one can establish local, provisional adequacy for narrow tasks over short windows.

2) Closed, Semi-Stable, and Open Systems — A Clean Partition

2.1 Closed Systems

Definition.

Finite, enumerable outcome spaces; stable mechanisms; repeatable trials (dice, cards, simple lotteries).

Testing.

Direct frequency checks under controlled repetition.

Relevance.

High: assumptions mirror the physical symmetries that fix probabilities.
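As a minimal sketch of a direct frequency check, the Python below rolls a simulated fair die and compares the observed face counts with the uniform expectation using Pearson's chi-square statistic. The simulated die, the sample size, the helper names, and the use of the 5% critical value for five degrees of freedom (about 11.07) are illustrative assumptions; with a physical die, the tallies would come from actual rolls.

```python
import random

# Illustrative sketch; helper names and numbers are hypothetical.


def chi_square_uniform(counts):
    """Pearson chi-square statistic against equal expected counts per face."""
    total = sum(counts)
    expected = total / len(counts)
    return sum((obs - expected) ** 2 / expected for obs in counts)


def roll_counts(n_rolls, faces=6, seed=0):
    """Tally faces from n_rolls simulated rolls of a fair die."""
    rng = random.Random(seed)
    counts = [0] * faces
    for _ in range(n_rolls):
        counts[rng.randrange(faces)] += 1
    return counts


# Upper 5% point of the chi-square distribution with 5 degrees of freedom.
CRITICAL_5DF = 11.07

counts = roll_counts(6000)
stat = chi_square_uniform(counts)
print(counts, round(stat, 2), "consistent with fairness:", stat < CRITICAL_5DF)
```

Repeating the check with fresh rolls is a coherent replication: the die and the rolling procedure stay the same in the ways that matter.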

2.2 Semi-Stable Industrial Systems

Definition.

Controlled processes with mild drift (manufacturing lines, regulated chemical processes).

Testing.

Ongoing monitoring against expected rates (e.g., “three-sigma” rules for batch means).

Relevance.

Often adequate because the objective (detecting shifts) can be served by simple rarity thresholds even when normality or independence is only approximate.
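A minimal sketch of a three-sigma rule for batch means follows. It assumes a hypothetical baseline period whose batch means fix the centre line and spread (a simplified version; classical Shewhart charts usually estimate spread from within-batch ranges). The measurements are simulated from a skewed exponential distribution, and the `shift` applied to later batches is an invented stand-in for process drift. The rule flags rarity; it does not require the data to be normal.

```python
import random
import statistics

# Illustrative sketch; names, batch sizes, and the drift are hypothetical.


def three_sigma_limits(baseline_means):
    """Lower limit, centre line, and upper limit from baseline batch means."""
    centre = statistics.fmean(baseline_means)
    sigma = statistics.stdev(baseline_means)
    return centre - 3 * sigma, centre, centre + 3 * sigma


def flag_batches(batch_means, limits):
    """Indices of batch means falling outside the three-sigma limits."""
    lower, _, upper = limits
    return [i for i, m in enumerate(batch_means) if m < lower or m > upper]


def simulate_batches(n_batches, batch_size, shift=0.0, seed=1):
    """Batch means of skewed (exponential) measurements, optionally shifted."""
    rng = random.Random(seed)
    return [
        statistics.fmean([rng.expovariate(1.0) + shift for _ in range(batch_size)])
        for _ in range(n_batches)
    ]


baseline = simulate_batches(50, batch_size=20, seed=1)
limits = three_sigma_limits(baseline)
later = simulate_batches(20, batch_size=20, shift=2.0, seed=2)  # hypothetical drift
print("limits:", [round(x, 3) for x in limits])
print("flagged later batches:", flag_batches(later, limits))
```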

2.3 Unstable Open Systems

Definition.

Mechanisms evolve; units differ in consequential ways; actions feed back (medicine, macroeconomics, social behavior, financial markets, emerging epidemics).

Testing.

Strict replication impossible; only localized, prospective performance checks make sense.

Relevance.

Always an empirical question; no theorem licenses its own application.

3) Assumptions: Truth vs. Relevance

3.1 The Four-Cell Map

Consider two axes: assumptions (true/false) and relevance (relevant/irrelevant to the objective).

True & Relevant → Ideal success.

True & Irrelevant → Mathematically tidy, practically pointless.

False & Irrelevant → Method may still work; robustness by accident or design.

False & Relevant → Failure; key structure is missed.

The usual curriculum lives in the first cell. Real practice often happens in the other three.
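A small simulation, under assumed conditions, can make two of the cells concrete. Draws come from a skewed exponential distribution, so the normality assumption is false. If the objective is the mean, a normal-theory 95% interval still covers the true mean at close to the stated rate (False & Irrelevant). If the objective is a tail, a normal-based 99th percentile sits well below the true one (False & Relevant). The distribution, sample size, trial count, and helper names are illustrative choices.

```python
import math
import random
import statistics

# Illustrative sketch; distribution, sizes, and names are hypothetical.
TRUE_MEAN = 1.0                   # mean of Exponential(rate=1)
TRUE_P99 = -math.log(0.01)        # 99th percentile of Exponential(rate=1)
Z95, Z99 = 1.96, 2.326            # normal quantiles: two-sided 95%, one-sided 99%


def mean_interval_covers(sample):
    """Does the normal-theory 95% interval for the mean contain the true mean?"""
    m = statistics.fmean(sample)
    half = Z95 * statistics.stdev(sample) / math.sqrt(len(sample))
    return m - half <= TRUE_MEAN <= m + half


def normal_p99(sample):
    """99th percentile estimated as if the data were normal."""
    return statistics.fmean(sample) + Z99 * statistics.stdev(sample)


rng = random.Random(42)
trials, n = 2000, 100
covered, p99_estimates = 0, []
for _ in range(trials):
    sample = [rng.expovariate(1.0) for _ in range(n)]
    covered += mean_interval_covers(sample)
    p99_estimates.append(normal_p99(sample))

print("mean-interval coverage:", covered / trials)  # compare with nominal 0.95
print("normal-based p99 (avg):", round(statistics.fmean(p99_estimates), 2))
print("true p99:", round(TRUE_P99, 2))
```

The assumption is equally false in both uses; only its relevance to the objective changes.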

3.2 Exchangeability vs. Sameness

Open-system practice often swaps “identical trials” for “exchangeable cases,” i.e., cases treated as if order and identity do not matter. That “as if” move is the leap. It needs evidence, not a theorem.
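One way to gather part of that evidence, sketched here under assumptions, is a permutation check of whether case order matters: compare the mean of the early half of a series with the late half, then see how often random reorderings produce a gap at least as large. A small p-value counts against treating the cases as exchangeable over time; a large one is weak support, not proof. The series, the half-split statistic, and the helper names are hypothetical; other orderings (by site, by clinician) could be checked the same way.

```python
import random
import statistics

# Illustrative sketch; data, statistic, and names are hypothetical.


def half_gap(values):
    """Absolute difference between the means of the first and second halves."""
    mid = len(values) // 2
    return abs(statistics.fmean(values[:mid]) - statistics.fmean(values[mid:]))


def order_permutation_pvalue(values, n_perm=5000, seed=0):
    """Share of random orderings whose half gap is at least the observed one."""
    rng = random.Random(seed)
    observed = half_gap(values)
    shuffled = list(values)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        if half_gap(shuffled) >= observed:
            extreme += 1
    # The +1 terms count the observed ordering itself, so p is never exactly 0.
    return (extreme + 1) / (n_perm + 1)


rng = random.Random(7)
stable = [rng.gauss(10, 2) for _ in range(60)]
drifting = [rng.gauss(10 + 0.1 * t, 2) for t in range(60)]  # hypothetical slow drift
print("stable series p:", round(order_permutation_pvalue(stable), 3))
print("drifting series p:", round(order_permutation_pvalue(drifting), 3))
```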

4) The Central Limit Theorem — What It Actually Provides

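The CLT asserts: under stipulated conditions (in the classical version, independent and identically distributed terms with finite variance; other versions relax independence to weak dependence, or identical distribution to Lindeberg-type conditions), standardized sums/means converge in distribution to a normal law. This is an internal claim. It says nothing about: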

Whether those conditions hold in a given domain;

Whether the mean is the right target (as opposed to tails, thresholds, or heterogeneity);

Whether system drift will invalidate any local success.
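For reference, the classical (Lindeberg–Lévy) version of the internal claim can be written as follows, with \(X_i\), \(\mu\), \(\sigma\), and \(\bar{X}_n\) as standard notation introduced only for this display. Every symbol is defined inside the formalism; none refers to a particular emergency department, market, or patient population.

```latex
X_1, X_2, \ldots \ \text{i.i.d.}, \quad \mathbb{E}[X_i] = \mu, \quad 0 < \operatorname{Var}(X_i) = \sigma^2 < \infty
\;\Longrightarrow\;
\frac{\sqrt{n}\,(\bar{X}_n - \mu)}{\sigma} \xrightarrow{d} \mathcal{N}(0, 1),
\qquad \bar{X}_n = \frac{1}{n}\sum_{i=1}^{n} X_i .
```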

Invoking the CLT as a universal smoother (“large samples make it normal”) is rhetoric, not a license.
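A quick simulation shows why sample size alone is not a license. For standard Cauchy draws, which have no finite variance (or even a defined mean), the sample mean has the same Cauchy distribution as a single draw, so its spread does not shrink as n grows. The sketch compares the interquartile range of sample means at n = 10 and n = 1000 with that of exponential draws, which do meet the classical conditions. The distributions, sizes, repetition counts, and helper names are illustrative assumptions.

```python
import math
import random
import statistics

# Illustrative sketch; distributions, sizes, and names are hypothetical.


def cauchy(rng):
    """Standard Cauchy draw by inverse CDF: tan(pi * (U - 1/2))."""
    return math.tan(math.pi * (rng.random() - 0.5))


def iqr_of_means(draw, n, reps, rng):
    """Interquartile range of `reps` sample means, each from n draws."""
    means = [statistics.fmean([draw(rng) for _ in range(n)]) for _ in range(reps)]
    q1, _, q3 = statistics.quantiles(means, n=4)
    return q3 - q1


rng = random.Random(3)
for n in (10, 1000):
    c = iqr_of_means(cauchy, n, 400, rng)
    e = iqr_of_means(lambda r: r.expovariate(1.0), n, 400, rng)
    print(f"n={n:5d}  Cauchy IQR of means: {c:.2f}   exponential IQR of means: {e:.3f}")
```

The exponential column narrows roughly in proportion to \(1/\sqrt{n}\); the Cauchy column hovers near 2, the interquartile range of a single standard Cauchy draw.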

5) Replication: Coherent in Closed Systems, Conceptually Broken in Open Systems

5.1 Coherent Replication

In closed systems, the setup is repeatable in the ways that matter. Model–world fit can be checked by frequency. The theorem–world bridge is testable.

5.2 Conceptual Breakdown

In open systems, every new study is a new situation. Divergence among results cannot be neatly assigned to “error” or “misuse”; the system itself has moved. Replication, strictly conceived, is not available. “Re-performance” is the best that can be done, and its interpretation is entangled with change.
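A toy simulation with entirely hypothetical numbers illustrates the entanglement. The same intervention is evaluated twice by the same correct procedure (treated mean minus control mean), but the true effect drifts between the two periods. The estimates diverge, and neither analysis contains an error or a misuse. The drift function and all names are invented for illustration.

```python
import random
import statistics

# Illustrative sketch; the drift, sample sizes, and names are hypothetical.


def true_effect(period):
    """Hypothetical effect that drifts over time (e.g. a changing case mix)."""
    return 2.0 - 0.5 * period


def run_study(period, n_per_arm, rng):
    """Estimate the effect as treated mean minus control mean in one period."""
    control = [rng.gauss(10.0, 3.0) for _ in range(n_per_arm)]
    treated = [rng.gauss(10.0 + true_effect(period), 3.0) for _ in range(n_per_arm)]
    return statistics.fmean(treated) - statistics.fmean(control)


rng = random.Random(5)
first = run_study(period=0, n_per_arm=2000, rng=rng)
second = run_study(period=3, n_per_arm=2000, rng=rng)
print(f"study in period 0: {first:.2f} (true {true_effect(0):.2f})")
print(f"study in period 3: {second:.2f} (true {true_effect(3):.2f})")
```

Read as a replication, the second study looks like a failure; read against the drift, both are accurate snapshots of different situations. In a real open system the drift function is unknown, which is exactly the difficulty.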

6) Operational Adequacy: What Can Be Tested, Plainly and Locally

A practical, non-jargon way to check CLT-based procedures in open systems focuses on consequences that matter for action:

1. Pick a concrete average. Example: weekly average wait time in an emergency department.

2. Work within a slow-change window. Choose a period before obvious regime shifts.

3. Make a next-step range. Using past data, produce a “95% likely range” for the next week’s average.

4. Record hits and misses prospectively. Tally how often the next week’s reality lands inside the range.

5. Cross-check with a simple resampling yardstick. Redraw past weeks, with replacement, many times to form an alternative range without normal-curve math; compare tallies.

6. Stress lightly. Introduce small, controlled tweaks if feasible; watch whether the tally collapses.

7. Slice smartly. Check subgroups and time slices relevant to decisions.
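Steps 2 through 5 can be sketched in Python under stated assumptions. The weekly averages are synthetic (each is the mean of a hypothetical 200 skewed, lognormal wait times), the 26-week window is an arbitrary choice, and the normal-based range uses the usual prediction-interval widening by \(\sqrt{1 + 1/W}\) for a window of W weeks. The resampling yardstick draws past weekly averages with replacement and takes their 2.5th and 97.5th percentiles, so it involves no normal-curve math. Stress tests and subgroup slices (steps 6 and 7) are left out, and all names are hypothetical.

```python
import math
import random
import statistics

# Illustrative sketch; data, window, and names are hypothetical.


def weekly_averages(n_weeks, patients_per_week, seed=0):
    """Synthetic weekly average waits in minutes; lognormal, so skewed."""
    rng = random.Random(seed)
    return [
        statistics.fmean(
            [rng.lognormvariate(math.log(60), 0.6) for _ in range(patients_per_week)]
        )
        for _ in range(n_weeks)
    ]


def normal_range(past):
    """95% next-week range treating weekly averages as normal (CLT-based)."""
    m, s, w = statistics.fmean(past), statistics.stdev(past), len(past)
    half = 1.96 * s * math.sqrt(1 + 1 / w)
    return m - half, m + half


def resampling_range(past, rng, draws=2000):
    """95% range from past weeks drawn with replacement; no normal curve."""
    resampled = sorted(rng.choice(past) for _ in range(draws))
    return resampled[int(0.025 * draws)], resampled[int(0.975 * draws) - 1]


def prospective_tally(series, window=26, seed=1):
    """Walk forward one week at a time, recording hits for each method."""
    rng = random.Random(seed)
    hits = {"normal": 0, "resampling": 0}
    forecasts = 0
    for t in range(window, len(series)):
        past, actual = series[t - window:t], series[t]  # range fixed before week t
        for name, (low, high) in (("normal", normal_range(past)),
                                  ("resampling", resampling_range(past, rng))):
            hits[name] += low <= actual <= high
        forecasts += 1
    return {name: count / forecasts for name, count in hits.items()}


series = weekly_averages(n_weeks=130, patients_per_week=200)
print(prospective_tally(series))  # hit rates to compare with the claimed 0.95
```

Because this synthetic series is stable by construction, it can only show the mechanics; with real data, a hit rate well below the claimed one, or a large gap between the two methods, is what the tally is designed to expose.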

Meaning of a pass. Ranges hit their marks near the claimed rates across windows and subgroups; the simple resampling check agrees within tolerances; tiny nudges do not wreck performance.

Meaning of a fail. For this window, this task, this system, the CLT-based method is operationally inadequate. No verdict about the theorem; only a mismatch with the world.

7) Real-World Practices That Resemble This Check

These activities rarely advertise themselves as “testing the CLT,” but they embody the same idea—judge the tool by empirical operating characteristics:

Weather forecast verification. Do stated ranges contain realized temperatures at the promised frequency?

Industrial process control. Do batch averages cross “out-of-bounds” at the expected rarity? If not, limits or assumptions are revised.

Hospital monitoring. Do weekly averages (waits, infections) behave as “normal variation” suggests? Deviations trigger method review or action.

Financial risk backtesting. Do losses exceed the predicted “worst-case” threshold at the advertised rate? Repeated misses signal model failure.

Sports analytics. Do performance intervals separate usual streaks from unusual ones at claimed frequencies?

All are plainly empirical, local, and provisional. None establish universal truth about the CLT; all ask whether the chosen approximation behaves as promised for the job at hand.
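The shared question in these practices, whether misses occur at the advertised rate, can be posed as a simple binomial check. The sketch below assumes forecasts are independent from period to period (often only approximately true) and computes the exact probability of at least as many misses as observed if the claimed miss rate were correct. The counts and the function name are hypothetical.

```python
from math import comb

# Illustrative sketch; counts and names are hypothetical.


def prob_at_least(misses, trials, claimed_miss_rate):
    """P(X >= misses) for X ~ Binomial(trials, claimed_miss_rate)."""
    p = claimed_miss_rate
    return sum(comb(trials, k) * p**k * (1 - p) ** (trials - k)
               for k in range(misses, trials + 1))


# Hypothetical year of weekly 95% ranges: 52 forecasts, 8 misses (2.6 expected).
tail = prob_at_least(misses=8, trials=52, claimed_miss_rate=0.05)
print(f"P(at least 8 misses | claim true) = {tail:.4f}")
```

A small tail probability says the advertised rate is hard to reconcile with the record for this window and task; it says nothing about the theorem behind the ranges.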

8) Why the Leap Persists (Conjectural)

The following is explicitly conjectural:

Enculturation in formalism. Training emphasizes internal derivations; habit blurs the border between model and world.

Pedagogical tidiness. Courses smooth over relevance and replication limits to keep the story teachable.

Institutional incentives. Publishing rewards results, not domain-specific validation of assumptions.

Framework disputes. Energy is spent on frequentist vs. Bayesian debates, not on the orthogonal issue of relevance in unstable systems.

Cognitive economy. “Assumptions true ⇒ conclusions true” functions as a shortcut that saves work, until it fails.

9) Objections to “Proof as Argument” That Avoid Platonism — And Why They Fail

Formalist reduction. Defines proof as a syntactic derivation. Fails because syntax and rules are humanly chosen, and mapping from informal mathematics to formal code is interpretive.

Mechanized verification. Offloads checking to computers. Fails because humans still choose the calculus, encode the claim, and interpret the result’s meaning and relevance.

Sociological legitimation. Treats proof as compliance with communal norms. Concedes the point: acceptance is social and rhetorical.

Pragmatist instrumentality. Treats proof as a tool for extending work. Still depends on persuading a community that the result is reliable enough to use.

No-Escape Conclusion. Without Platonism, proof depends on human understanding. There is nowhere else for it to live.

10) Practical Stance for Work in Open, Unstable Domains

Treat applicability as a conjecture to be stress-tested, not a given.

Prioritize relevance diagnostics over assumption policing: identify which features actually drive outcomes for the decision.

Evaluate local operating characteristics prospectively; accept only conditional, windowed warrants.

Record when methods succeed despite violations or fail despite satisfaction; update practice based on those empirical asymmetries.

When objectives hinge on tails, thresholds, heterogeneity, interference, or regime changes, recognize that CLT-style averaging may be irrelevant by design.

Summary

Three separations anchor the position:

1. Proof vs. World. Proof is a human, language-bound practice. Absent Platonism, its authority is cognitive and social.

2. Theorem vs. Applicability. A theorem binds assumptions to conclusions only inside mathematics. Claims about the world require relevance and empirical warrant; the common positive and negative meta-assumptions are conjectures.

3. Closed vs. Open Systems. Replication is coherent in closed systems and conceptually broken in unstable open systems. The CLT cannot be proved applicable there; at best, CLT-based procedures can earn local, provisional warrants through prospective performance.

These separations do not denigrate mathematics. They restore the boundary between formal results and empirical reality, and they make explicit the human, linguistic, and biological substrate on which all proving and all modeling ultimately depend.

Readings

Box, G. E. P., & Draper, N. R. (1987). Empirical model-building and response surfaces. New York, NY: Wiley.

— Classic slogan “All models are wrong, but some are useful” in its proper context: models as pragmatic tools. Emphasizes empirical checking over formal guarantees.

Cartwright, N. (1983). How the laws of physics lie. Oxford, UK: Oxford University Press.

— Argues that laws succeed locally and under ceteris paribus conditions; relevance and fit to practice trump universal pretensions.

Freedman, D. A. (1991). Statistical models and shoe leather. Sociological Methodology, 21, 291–313.

— Demands grounding models in real mechanisms and fieldwork; a sustained critique of automatic application of formal results.

Freedman, D. A. (2009). Statistical models: Theory and practice (rev. ed.). Cambridge, UK: Cambridge University Press.

— Plain-language demonstrations of how model assumptions can be satisfied yet irrelevant, or violated yet inconsequential, depending on the objective.

Hacking, I. (1975). The emergence of probability. Cambridge, UK: Cambridge University Press.

— Historical account of probability as a human invention shaped by problems of the time; useful background for the cognitive-social framing.

Ioannidis, J. P. A. (2005). Why most published research findings are false. PLoS Medicine, 2(8), e124. https://doi.org/10.1371/journal.pmed.0020124

— A landmark on reproducibility framed largely in terms of incentives and misuse; illustrates how the literature often presumes replication is conceptually available.

Lakatos, I. (1976). Proofs and refutations. Cambridge, UK: Cambridge University Press.

— A canonical study of proof as evolving argument within a community; undermines the myth of inexorable deduction.

Mandelbrot, B. (1963). The variation of certain speculative prices. The Journal of Business, 36(4), 394–419.

— Early demonstration that financial returns violate Gaussian assumptions; foundational for fat-tailed alternatives.

Mandelbrot, B., & Hudson, R. L. (2004). The (mis)behavior of markets. New York, NY: Basic Books.

— Popular but rigorous account of fat tails and scaling in markets; a direct challenge to Gaussian smoothing in an unstable domain.

Mayo, D. G. (2018). Statistical inference as severe testing. Cambridge, UK: Cambridge University Press.

— Argues for empirically severe tests; helpful for framing local, prospective checks of operating characteristics.

Polanyi, M. (1966). The tacit dimension. Garden City, NY: Doubleday.

— Frames knowledge (including mathematical practice) as rooted in tacit, embodied skills; fits the cognitive grounding thesis.

Shewhart, W. A. (1931). Economic control of quality of manufactured product. New York, NY: Van Nostrand.

— The original control-chart framework: a semi-stable domain where normal-based limits sometimes work by detecting rarity, not by proving normality.

Taleb, N. N. (2007). The black swan: The impact of the highly improbable. New York, NY: Random House.

— Forceful critique of Gaussian smoothing and the neglect of tail risk; illustrates why CLT-style reasoning can be irrelevant when extremes govern.

Appel, K., & Haken, W. (1977). Every planar map is four colorable. Part I: Discharging. Illinois Journal of Mathematics, 21(3), 429–490.

— A canonical case of computer-assisted proof; useful for discussing why mechanical verification still depends on human interpretation and acceptance.