Considering Boxes and Visibility of Mechanisms

Points on engineering terminology, clarity, and the suitability of terms in various contexts

Note: A nerdish exploration of terms, with phrasing coaxed from ChatGPT 4.0.

Author’s Preface

As I get ready for a discussion of memory, I take a detour into some engineering terminology. The detour is worthwhile, though: these ideas matter and are worth knowing.

Introduction

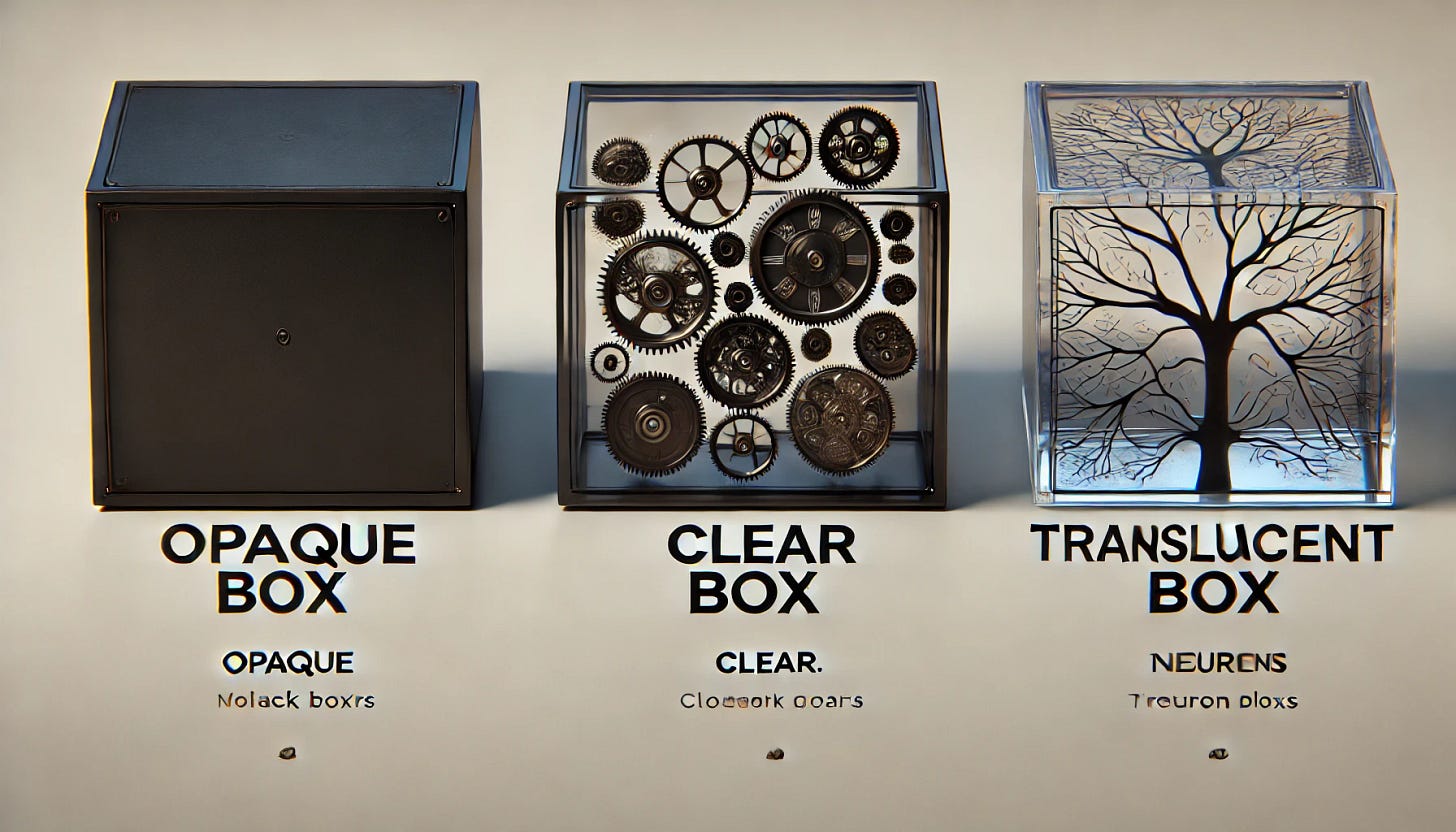

In engineering, systems analysis, and even philosophy, terms that describe how much of a system’s inner workings we can see are essential for clarity and accuracy. The traditional terms “Black Box” and “White Box” are well known but often inadequate: “Black Box” suggests a closed system, while “White Box” implies total transparency, a level of openness few systems achieve (Franceschetti, 2013; Shirazi et al., 2019). Here, I expand the vocabulary to include “Opaque/Black Box,” “Clear/Transparent Box,” and “Translucent Box,” each denoting a different degree of visibility into a system. This expanded vocabulary is valuable when studying complex systems, such as those in engineering and biological research, where partial visibility is more realistic than full transparency (Craver, 2007). Adopting these terms lets us characterize the limits of our knowledge more precisely and set realistic expectations about what can be known, especially for complex systems that resist straightforward understanding.

Discussion

Opaque/Black Box: Although “Opaque Box” is not a common term, it is a fitting synonym for the familiar “Black Box,” because Black Boxes are by definition opaque: they conceal their inner workings (Franceschetti, 2013). In this model, we study input-output relationships without insight into the internal mechanisms. Researchers describe such systems mathematically using only observed behavior, an approach that is practical for complex or inaccessible systems where input-output analysis is the only feasible option (Kaplan, 2011). Certain artificial intelligence applications are a case in point: a system’s behavior can be measured in detail even when its internal processes remain hidden from view (Russell & Norvig, 2021).
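
To make the input-output idea concrete, here is a minimal Python sketch, assuming a system we may only probe: feed it inputs, record outputs, and fit a model to the recorded pairs. The function `hidden_system` and its formula are hypothetical stand-ins for the concealed internals; the modeler’s code (`probe`, `fit_linear`) never looks inside it, and ordinary least squares is just one simple choice of fitting method.

```python
from typing import Callable, List, Tuple


def hidden_system(x: float) -> float:
    # Hypothetical stand-in for a system whose internals we cannot inspect.
    # The modeler below only ever calls it; it never reads this formula.
    return 3.0 * x + 2.0


def probe(system: Callable[[float], float], inputs: List[float]) -> List[Tuple[float, float]]:
    # Black-box access: feed inputs and record the outputs, nothing more.
    return [(x, system(x)) for x in inputs]


def fit_linear(pairs: List[Tuple[float, float]]) -> Tuple[float, float]:
    # Ordinary least-squares fit of y ~ a*x + b using only observed pairs.
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pairs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


pairs = probe(hidden_system, [0.0, 1.0, 2.0, 3.0, 4.0])
slope, intercept = fit_linear(pairs)
print(f"y ~ {slope:.2f}*x + {intercept:.2f}")  # prints: y ~ 3.00*x + 2.00
```

The fitted line reproduces the observed behavior, but it is a description rather than an explanation: it says nothing about why the system behaves this way, and nothing reliable about inputs outside the probed range.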

Clear/Transparent Box: The usual term, “White Box,” paradoxically suggests some opacity (white surfaces are opaque, after all), so “Clear Box” or “Transparent Box” more accurately denotes complete visibility. In this model, every component, its responses, and the mathematical transformations within the system are fully understood and accessible. Such transparency is rarely attainable in complex domains such as biology, where intricate interactions among parts limit full comprehension (Craver, 2007). Clear Box thinking is possible, however, in simpler systems or controlled environments, where mechanisms are fully accessible and modeling can be precise and predictive.
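
For contrast, a Clear Box model needs no fitting at all, because the output follows directly from known components and laws. As an illustrative example (not drawn from the sources cited here), the sketch below computes the output of an idealized resistor voltage divider, where Ohm’s law for two resistors in series gives V_out = V_in · R2 / (R1 + R2). The function name and component values are hypothetical.

```python
def voltage_divider(v_in: float, r1: float, r2: float) -> float:
    # Every component and the governing law (Ohm's law for resistors in
    # series) are assumed known, so the output is computed from the
    # mechanism itself rather than estimated from observations.
    return v_in * r2 / (r1 + r2)


print(voltage_divider(12.0, 1000.0, 2000.0))  # prints 8.0
```

Even here, full transparency rests on idealization: real resistors have tolerances and temperature drift, which is one reason clear boxes are rare outside simple, controlled settings.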

Translucent Box: Although “Translucent Box” is not a traditional term in systems analysis, it aptly captures an intermediate state of understanding, in which part of the system is visible but significant gaps remain (Kaplan, 2011). The term is especially relevant in fields like biology, where processes such as memory encoding, consolidation, and retrieval are partially understood but still hold open questions (Craver, 2007). Researchers can observe some functions and responses in detail and build models that hold within specific contexts, though much remains hidden. Translucent Box thinking suits systems in which partial visibility supports testable hypotheses but not a complete account.
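
The translucent case can be sketched as well. In system identification, this middle ground is commonly called gray-box modeling: part of the structure is known, and the rest is estimated from data. The minimal Python example below assumes, hypothetically, that a component is known to decay exponentially from a known starting value, y(t) = y0 · exp(-k·t), while the rate k is hidden. `observed_decay` and its rate of 0.5 are invented for illustration, and the estimator fits k by least squares on the log-transformed data.

```python
import math
from typing import List


def observed_decay(t: float) -> float:
    # Hypothetical system: we are told it decays exponentially from 10.0,
    # but the rate constant (0.5 here) is hidden from the modeler.
    return 10.0 * math.exp(-0.5 * t)


def estimate_rate(times: List[float], values: List[float], y0: float) -> float:
    # Known (visible) structure: y(t) = y0 * exp(-k * t),
    # so ln(y / y0) = -k * t is a line through the origin.
    # A least-squares slope through the origin recovers the hidden k.
    num = sum(t * math.log(y / y0) for t, y in zip(times, values))
    den = sum(t * t for t in times)
    return -num / den


times = [0.5, 1.0, 2.0, 4.0]
values = [observed_decay(t) for t in times]
k_hat = estimate_rate(times, values, y0=10.0)
print(f"estimated k = {k_hat:.3f}")  # prints: estimated k = 0.500
```

Because the known structure does real work here, the estimate supports prediction beyond the observed times, but only as far as the assumed exponential form is correct. That dependence on a partially visible structure is what separates this case from both the opaque and the clear box.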

Summary

In essence, Opaque (Black) Box models are useful when knowledge is limited to input-output behavior, Translucent Box models describe systems with partial visibility and context-specific modeling, and Clear (Transparent) Box models represent the ideal of full transparency, attainable mainly in simple or controlled systems. This continuum, from opaque to clear, supports realistic assessment of, and communication about, complex systems (Franceschetti, 2013; Shirazi et al., 2019). Categorizing systems this way helps us frame our expectations and work within the limits of our knowledge, especially in domains like biology and AI, where true transparency is often elusive (Craver, 2007; Russell & Norvig, 2021).

References

Craver, C. F. (2007). Explaining the brain: Mechanisms and the mosaic unity of neuroscience. Oxford University Press. https://global.oup.com/academic/product/explaining-the-brain-9780199299317

Franceschetti, D. R. (2013). Encyclopedia of physical science and technology (3rd ed.). Academic Press.

Kaplan, D. M. (2011). How to demarcate the boundaries of cognition? In A. Clark & M. Weber (Eds.), The extended mind: Proceedings of the Edinburgh conference (pp. 19-39). MIT Press. https://philpapers.org/rec/KAPHTD

Russell, S., & Norvig, P. (2021). Artificial intelligence: A modern approach (4th ed.). Pearson.